[Update 9/1: I have been planning (before any comments, incidentally) to write a version of this post which just provides a concise verbal explanation of the experiment, supplemented perhaps with a little formal calculation. However, I think the discussion below comes to a correct understanding of the experiment, and I will leave it up as an example of how a physicist somewhat conversant with but not usually working in quantum optics reads and quickly comes to a correct understanding of a paper. Yes, the understanding is correct even if some misleading language was used in places, but I thank commenter Andreas for pointing out the latter.]

Thanks to tweeters @AtheistMissionary and @robertwrighter for bringing to my attention this experiment by a University of Vienna group (Gabriela Barreto Lemos, Victoria Borish, Garrett D. Cole, Sven Ramelow, Radek Lapkiewicz and Anton Zeilinger), published in Nature, on imaging using entangled pairs of photons. It seems vaguely familiar, perhaps from my visit to the Brukner, Aspelmeyer and Zeilinger groups in Vienna earlier this year; it may be that one of the group members showed or described it to me when I was touring their labs. I'll have to look back at my notes.

This New Scientist summary prompts the Atheist and Robert to ask (perhaps tongue-in-cheek?) if it allows faster-than-light signaling. The answer is of course no. The New Scientist article fails to point out a crucial aspect of the experiment, which is that there are two entangled pairs created, each one at a different nonlinear crystal, labeled NL1 and NL2 in Fig. 1 of the Nature article. [Update 9/1: As I suggest parenthetically, but in not sufficiently emphatic terms, four sentences below, and as commenter Andreas points out, there is (eventually) a superposition of an entangled pair having been created at different points in the setup; "two pairs" here is potentially misleading shorthand for that.] To follow along with my explanation, open the Nature article preview, and click on Figure 1 to enlarge it. Each pair is coherent with the other pair, because the two pairs are created on different arms of an interferometer, fed by the same pump laser. The initial beamsplitter labeled "BS1" is where these two arms are created (the nonlinear crystals come later). (It might be a bit misleading to say two pairs are created by the nonlinear crystals, since that suggests that in a "single shot" the mean photon number in the system after both nonlinear crystals have been passed is 4, whereas I'd guess it's actually 2 --- i.e. the system is in a superposition of "photon pair created at NL1" and "photon pair created at NL2".) Each pair consists of a red and a yellow photon; on one arm of the interferometer, the red photon created at NL1 is passed through the object "O". Crucially, the second pair is not created until after this beam containing the red photon that has passed through the object is recombined with the other beam from the initial beamsplitter (at D2). ("D" stands for "dichroic mirror"---this mirror reflects red photons, but is transparent at the original (undownconverted) wavelength.) 
Only then is the resulting combination passed through the nonlinear crystal, NL2. Then the red mode (which is fed not only by the red mode that passed through the object and has been recombined into the beam, but also by the downconversion process from photons of the original wavelength impinging on NL2) is pulled out of the beam by another dichroic mirror. The yellow mode is then recombined with the yellow mode from NL1 on the other arm of the interferometer, and the resulting interference observed by the detectors at lower right in the figure.
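
As a minimal sketch, the setup just described can be written as a single state. The mode labels here are assumptions chosen for illustration (in the spirit of the notation around Eq. (1) of the paper, not copied from it): $|c\rangle_Y$ and $|e\rangle_Y$ are the yellow modes leaving NL1 and NL2, $|f\rangle_R$ is the single red mode leaving NL2, $|w\rangle_R$ is a red mode for light lost at the object, and the object is idealized as an amplitude transmission $T$ with phase shift $\gamma$. Path-length phases are dropped, and at most one pair is assumed to be created per shot.

$$
|\psi\rangle = \frac{1}{\sqrt{2}}\Big[\big(T e^{i\gamma}\,|c\rangle_Y + |e\rangle_Y\big)\,|f\rangle_R + \sqrt{1-T^2}\,|c\rangle_Y\,|w\rangle_R\Big]
$$

Every term contains exactly one red and one yellow photon, so in this idealized description the photon number is 2 rather than 4. That is the sense in which "two pairs" is shorthand for a superposition of "pair created at NL1" and "pair created at NL2".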

It is easy to see why this experiment does not allow superluminal signaling by altering the imaged object, and thereby altering the image. For there is an effectively lightlike or timelike (it will be effectively timelike, given the delays introduced by the beamsplitters and mirrors and such) path from the object to the detectors. It is crucial that the red light passed through the object be recombined, at least for a while, with the light that has not passed through the object, in some spacetime region in the past light cone of the detectors, for it is the recombination here that enables the interference between light not passed through the object, and light passed through the object, that allows the image to show up in the yellow light that has not (on either arm of the interferometer) passed through the object. Since the object must be in the past lightcone of the recombination region where the red light interferes, which in turn must be in the past lightcone of the final detectors, the object must be in the past lightcone of the final detectors. So we can signal by changing the object and thereby changing the image at the final detectors, but the signaling is not faster-than-light.

Perhaps the most interesting thing about the experiment, as the authors point out, is that it enables an object to be imaged at a wavelength that may be difficult to efficiently detect, using detectors at a different wavelength, as long as there is a downconversion process that creates a pair of photons with one member of the pair at each wavelength. By not pointing out the crucial fact that this is an interference experiment between two entangled pairs [Update 9/1: per my parenthetical remark above, and Andreas' comment, this should be taken as shorthand for "between a component of the wavefunction in which an entangled pair is created in the upper arm of the interferometer, and one in which one is created in the lower arm"], the description in New Scientist does naturally suggest that the image might be created in one member of an entangled pair, by passing the other member through the object, without any recombination of the photons that have passed through the object with a beam on a path to the final detectors, which would indeed violate no-signaling.

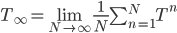

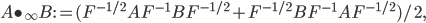

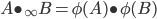

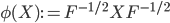

I haven't done a calculation of what should happen in the experiment, but my rough intuition at the moment is that the red photons that have come through the object interfere with the red component of the beam created in the downconversion process, and since the photons that came through the object have entangled yellow partners in the upper arm of the interferometer that did not pass through the object, and the red photons that did not pass through the object have yellow partners created along with them in the lower part of the interferometer, the interference pattern between the red photons that did and didn't pass through the object corresponds perfectly to an interference pattern between their yellow partners, neither of which passed through the object. It is the latter that is observed at the detectors. [Update 8/29: now that I've done the simple calculation, I think this intuitive explanation is not so hot. The phase shift imparted by the object "to the red photons" actually pertains to the entire red-yellow entangled pair that has come from NL1 even though it can be imparted by just "interacting" with the red beam, so it is not that the red photons interfere with the red photons from NL2, and the yellow with the yellow in the same way independently, so that the pattern could be observed on either color, with the statistical details perfectly correlated. Rather, without recombining the red photons with the beam, no interference could be observed between photons of a single color, be it red or yellow, because the "which-beam" information for each color is recorded in different beams of the other color. 
The recombination of the red photons that have passed through the object with the undownconverted photons from the other output of the initial beamsplitter ensures that the red photons all end up in the same mode after crystal NL2 whether they came into the beam before the crystal or were produced in the crystal by downconversion, thereby ensuring that the red photons contain no record of which beam the yellow photons are in, and allowing the interference due to the phase shift imparted by the object to be observed on the yellow photons alone.]
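
The role of putting the red photons into the same mode can be checked numerically with a small sketch. This is a hypothetical, highly idealized model rather than the calculation from the paper: the function name, the mode labels, the `overlap` parameter (how well the red mode from the object matches the red mode produced at NL2), and the real-valued 50/50 beamsplitter convention are all assumptions made for illustration. The red photon is never detected, so the code sums over red modes.

```python
import cmath
import math


def yellow_output_probs(T, gamma, overlap):
    """Return (p_g, p_h): probabilities of finding the yellow photon at the
    two outputs of the final beamsplitter.

    Hypothetical idealized model (assumptions, not taken from the paper):
      T       amplitude transmission of the object, 0 <= T <= 1
      gamma   phase shift imparted by the object
      overlap overlap (0..1) between the red mode coming from the object
              and the red mode produced at NL2; 1 means both end up in the
              same mode, 0 means they sit in orthogonal modes
    """
    s = 1 / math.sqrt(2)
    phase = cmath.exp(1j * gamma)
    # Amplitudes psi[(yellow_mode, red_mode)].
    # Yellow modes: 'c' (from NL1), 'e' (from NL2).
    # Red modes: 'f' (mode produced at NL2), 'f_perp' (orthogonal to it),
    # 'w' (light lost at the object).
    psi = {
        ("c", "f"): s * T * phase * overlap,
        ("c", "f_perp"): s * T * phase * math.sqrt(1 - overlap**2),
        ("c", "w"): s * math.sqrt(1 - T**2),
        ("e", "f"): s,
    }
    p_g = 0.0
    p_h = 0.0
    for red in ("f", "f_perp", "w"):
        a_c = psi.get(("c", red), 0)
        a_e = psi.get(("e", red), 0)
        # Assumed 50/50 beamsplitter on the yellow modes:
        # g = (c + e)/sqrt(2), h = (c - e)/sqrt(2).
        # Summing |amplitude|^2 over red modes traces out the red photon.
        p_g += abs(s * (a_c + a_e)) ** 2
        p_h += abs(s * (a_c - a_e)) ** 2
    return p_g, p_h
```

In this model the two probabilities work out to (1 ± T · overlap · cos γ)/2. With `overlap = 1` the object's phase shows up as fringes on the yellow photons alone; with `overlap = 0` the red photon carries the "which-beam" record and both outputs sit at 1/2 regardless of `gamma`.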

As I mentioned, not having done the calculation, I don't think I fully understand what is happening. [Update: Now that I have done a calculation of sorts, the questions raised in this paragraph are answered in a further Update at the end of this post. I now think that some of the recombinations of beams considered in this paragraph are not physically possible.] In particular, I suspect that if the red beam that passes through the object were mixed with the downconverted beam on the lower arm of the interferometer after the downconversion, and then peeled off before detection, instead of having been mixed in before the downconversion and peeled off afterward, the interference pattern would not be observed, but I don't have a clear argument why that should be. [Update 8/29: the process is described ambiguously here. If we could peel off the red photons that have passed through the object while leaving the ones that came from the downconversion at NL2, we would destroy the interference. But we obviously can't do that; neither we nor our apparatus can tell these photons apart (and if we could, that would destroy interference anyway). Peeling off *all* the red photons before detection actually would allow the interference to be seen, if we could have mixed back in the red photons first; the catch is that this mixing-back-in is probably not physically possible.] Anyone want to help out with an explanation? I suspect one could show that this would be the same as peeling off the red photons from NL2 after the beamsplitter but before detection, and only then recombining them with the red photons from the object, which would be the same as just throwing away the red photons from the object to begin with. If one could image in this way, then that would allow signaling, so it must not work. But I'd still prefer a more direct understanding via a comparison of the downconversion process with the red photons recombined before, versus after. 
Similarly, I suspect that mixing in and then peeling off the red photons from the object before NL2 would not do the job, though I don't see a no-signaling argument in this case. But it seems crucial, in order for the yellow photons to bear an imprint of interference between the red ones, that the red ones from the object be present during the downconversion process.

The news piece summarizing the article in Nature is much better than the one at New Scientist, in that it does explain that there are two pairs, and that the one member of one pair is passed through the object and recombined with something from the other pair. But it does not make it clear that the recombination takes place before the second pair is created---indeed it strongly suggests the opposite:

> According to the laws of quantum physics, if no one detects which path a photon took, the particle effectively has taken both routes, and a photon pair is created in each path at once, says Gabriela Barreto Lemos, a physicist at Austrian Academy of Sciences and a co-author on the latest paper.
>
> In the first path, one photon in the pair passes through the object to be imaged, and the other does not. The photon that passed through the object is then recombined with its other ‘possible self’ — which travelled down the second path and not through the object — and is thrown away. The remaining photon from the second path is also reunited with itself from the first path and directed towards a camera, where it is used to build the image, despite having never interacted with the object.

Putting the quote from Barreto Lemos about a pair being created on each path before the description of the recombination suggests that both pair-creation events occur before the recombination, which is wrong. But the description in this article is much better than the New Scientist description; everything else about it seems correct. It gets right the crucial point that there are two pairs, that one member of one pair passes through the object, and that this member is recombined with elements of the other pair at some point before detection, even if it is misleading about exactly where the recombination point is.

[Update 8/28: clearly if we peel the red photons off before NL2, and then peel the red photons created by downconversion at NL2 off after NL2 but before the final beamsplitter and detectors, we don't get interference because the red photons peeled off at different times are in orthogonal modes, each associated with one of the two different beams of yellow photons to be combined at the final beamsplitter, so the interference is destroyed by the recording of "which-beam" information about the yellow photons, in the red photons. But does this mean if we recombine the red photons into the same mode, we restore interference? That must not be so, for it would allow signaling based on a decision to recombine or not in a region which could be arranged to be spacelike separated from the final beamsplitter and detectors. But how do we see this more directly? Having now done a highly idealized version of the calculation (based on notation like that in and around Eq. (1) of the paper) I see that if we could do this recombination, we would get interference. But to do that we would need a nonphysical device, namely a one-way mirror, to do this final recombination. If we wanted to do the other variant I discussed above, recombining the red photons that have passed the object with the red (and yellow) photons created at NL2 and then peeling all red photons off before the final detector, we would even need a dichroic one-way mirror (transparent to yellow, one-way for red), to recombine the red photons from the object with the beam coming from NL2. So the only physical way to implement the process is to recombine the red photons that have passed through the object with light of the original wavelength in the lower arm of the interferometer before NL2; this just needs an ordinary dichroic mirror, which is a perfectly physical device.]